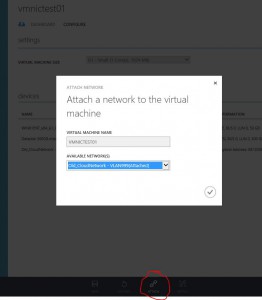

When a user adds extra virtual network adapters to a virtual machine through Azure Pack, those adapters end up with a dynamic MAC address. This happens regardless of the settings in the VM template that was used to create the VM. The NICs created when the VM is deployed honor the template setting; only NICs added afterwards are affected.

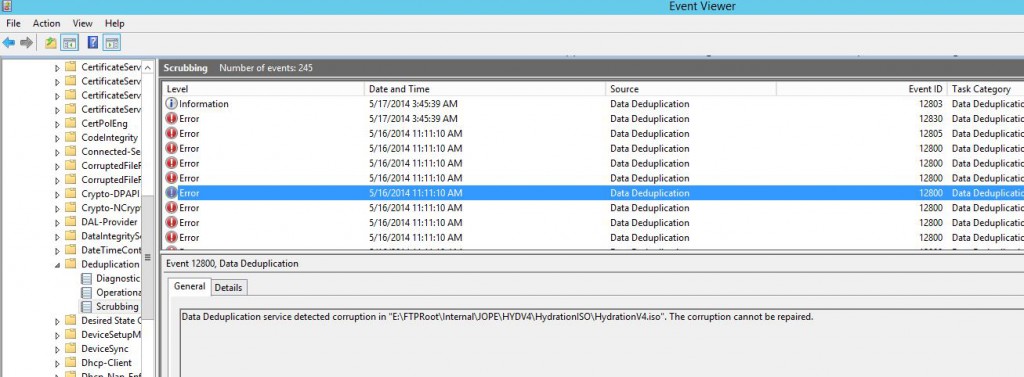

We have had some issues with VMs using dynamic MAC addresses: they got a new MAC address after migrating to another host, which left Linux machines unhappy and caused some other servers to get new DHCP addresses.

I figured this would be an excellent task for getting more familiar with SMA and for using that cool feature of Azure Pack. So I made a script that runs when a new network adapter is added to a VM through Azure Pack. It sets the MAC address type to static and lets SCVMM pick an address from the pool.

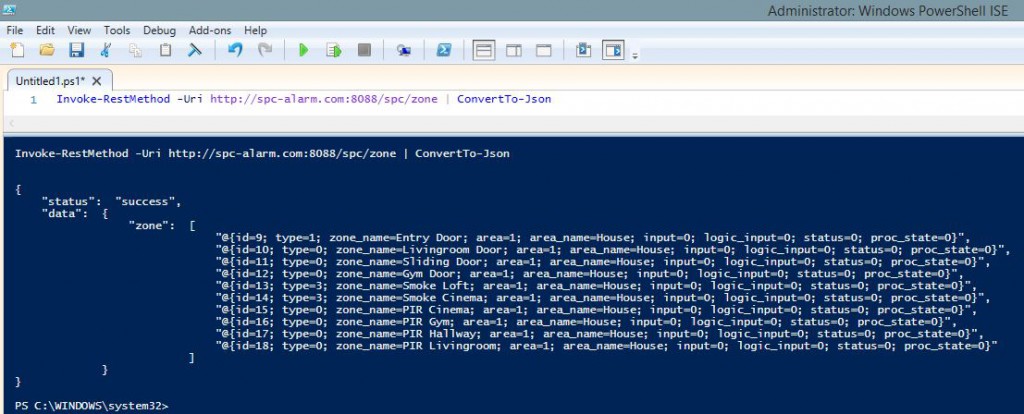

```powershell
# SMA Runbook script to change VM NIC to Static MAC Address.
workflow New-NetworkAdapter
{
    # Import information in Parameters passed to script.
    param([object]$params)

    # Connection Credentials. Need to exist in SMA Assets as VMMConnection.
    $con = Get-AutomationConnection -Name 'VmmConnection'
    $secpasswd = ConvertTo-SecureString $con.Password -AsPlainText -Force
    $ruSMAcreds = New-Object System.Management.Automation.PSCredential ($con.username, $secpasswd)

    # Execute commands on SCVMM Server. Connect with VMMConnection Credentials
    InlineScript
    {
        $con = $USING:Con
        $ruSMAcreds = $USING:ruSMAcreds
        $params = $USING:params

        Invoke-Command -ComputerName $con.computername -Credential $ruSMAcreds -ArgumentList ($params.VMId),$con -ScriptBlock {
            param(
                $VMID,
                $con
            )

            # Check if VM is already running, then first try a Shutdown, and else do a power off.
            # The user could have been quick and started the VM before the SMA Job starts.
            $restart = $false
            if ((Get-SCVirtualMachine -VMMServer $con.computername -ID $VMID).VirtualMachineState -ne "PowerOff")
            {
                $restart = $true
                Get-SCVirtualMachine -VMMServer $con.computername -ID $VMID | Stop-SCVirtualMachine -Shutdown
            }
            if ((Get-SCVirtualMachine -VMMServer $con.computername -ID $VMID).VirtualMachineState -ne "PowerOff")
            {
                $restart = $true
                Get-SCVirtualMachine -VMMServer $con.computername -ID $VMID | Stop-SCVirtualMachine -Force
            }

            # Change to a Static MAC Address for all NICs on that VM.
            Get-SCVirtualMachine -VMMServer $con.computername -ID $VMID |
                Get-SCVirtualNetworkAdapter |
                where {$_.MACAddressType -eq "Dynamic"} |
                Set-SCVirtualNetworkAdapter -MACAddressType "Static"

            # Start VM if it was previously running.
            if ($restart)
            {
                Get-SCVirtualMachine -VMMServer $con.computername -ID $VMID | Start-SCVirtualMachine
            }
        }
    }
}
```
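Once the runbook has run, it can be worth checking that no adapter on the VM is still dynamic. The following is only a minimal sketch of such a check, assuming the VMM PowerShell module is available on the machine where you run it. The helper name `Select-DynamicMacAdapter`, the server name `vmm01.contoso.local` and the VM name `MyVM` are hypothetical placeholders and not part of the runbook.

```powershell
# Sketch: keep only adapters that still report a Dynamic MAC address type.
# Select-DynamicMacAdapter is a hypothetical helper, not a VMM cmdlet.
function Select-DynamicMacAdapter {
    param(
        [Parameter(ValueFromPipeline = $true)]
        $Adapter
    )
    process {
        # Same filter the runbook uses before switching adapters to Static.
        if ($Adapter.MACAddressType -eq 'Dynamic') {
            $Adapter
        }
    }
}

# Example usage against VMM (uncomment and replace the placeholder names):
# Get-SCVirtualMachine -VMMServer 'vmm01.contoso.local' -Name 'MyVM' |
#     Get-SCVirtualNetworkAdapter |
#     Select-DynamicMacAdapter |
#     Select-Object Name, MACAddress, MACAddressType
```

If the pipeline returns nothing, every adapter on that VM should already be set to a static MAC address.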

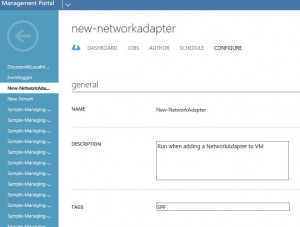

You will need to create a new runbook called New-NetworkAdapter with the tag SPF, and paste the above code into it.

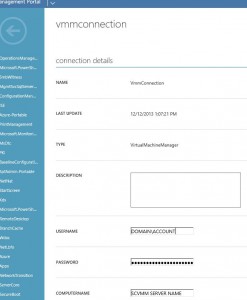

Also add an SMA connection asset with credentials for connecting to SCVMM.

Name the connection VmmConnection. The script looks for a connection object with that name and uses its username and password to connect to the SCVMM server specified in the same connection object.
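The script reads three fields from that connection: the computer name, the username and the password. As a rough illustration, the check below confirms that all three are filled in before anything is sent to SCVMM. It is a sketch only: `Test-VmmConnectionAsset` is a hypothetical helper, and it assumes the connection comes back as a hashtable-like object with `ComputerName`, `UserName` and `Password` fields, as the script uses them.

```powershell
# Sketch: verify that a connection object carries the fields the runbook reads.
# Test-VmmConnectionAsset is a hypothetical helper, not an SMA cmdlet.
function Test-VmmConnectionAsset {
    param(
        [Parameter(Mandatory = $true)]
        $Connection
    )

    # Collect the names of any fields that are missing or empty.
    $missing = foreach ($field in 'ComputerName', 'UserName', 'Password') {
        if ([string]::IsNullOrWhiteSpace([string]$Connection.$field)) {
            $field
        }
    }

    if ($missing) {
        Write-Warning ("Connection is missing: " + ($missing -join ', '))
        return $false
    }
    return $true
}

# Example with placeholder values (inside the runbook, the connection would
# come from Get-AutomationConnection -Name 'VmmConnection'):
# Test-VmmConnectionAsset -Connection @{
#     ComputerName = 'vmm01.contoso.local'
#     UserName     = 'CONTOSO\svc-sma'
#     Password     = 'placeholder'
# }
```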

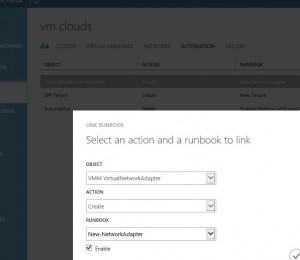

Finally, create an automated task that runs this runbook when a new network adapter is added.

Please let me know if you find this useful, if you have any issues or suggestions on how to improve my script.