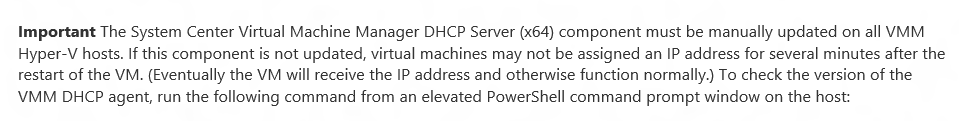

I’ve been to a couple of customers in the past month who have applied Update Rollup 3 for System Center 2012 R2 Virtual Machine Manager through WSUS, but didn’t read the fine print.

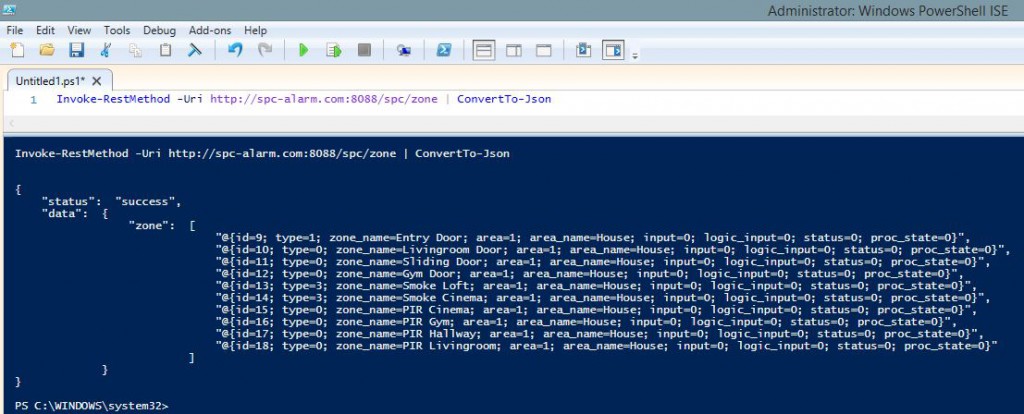

So I wrote a quick script to locate all Hyper-V Hosts with the old/incorrect version.

    # List all VM-Hosts with an old version of SCVMM DHCP Extension
    # Currently, the latest version is 3.2.7672.0

    # Specify your VMM Server Name
    $VMMServer = "VMMServerName"

    Invoke-Command -ComputerName (Get-SCVMHost -VMMServer $VMMServer).Name -ScriptBlock {
        # The version must be quoted; a bare 3.2.7672.0 is not a valid PowerShell literal.
        Get-WmiObject -Class Win32_Product | where {$_.Name -like "Microsoft System Center Virtual Machine Manager DHCP Server*" -and $_.Version -ne "3.2.7672.0"}
    }

The obvious next step was how to update the agent on all the Hyper-V hosts remotely and automatically!

There are a couple of different ways to do this, so let me go through them.

One of the easiest ways is to use Sysinternals PsExec: just run psexec against those servers, uninstall the old agent and install the new one. In my humble opinion, that is too much manual work when you have a lot of hosts, so I’d rather use PowerShell.

Looking at the PowerShell example above, you almost have a full script for doing the rest.

Have a look at this:

    # Check for old SCVMM DHCP Server Agent, uninstall old version, install new version.

    # Specify your VMM Server Name
    $VMMServer = "VMMServerName"

    Invoke-Command -ComputerName (Get-SCVMHost -VMMServer $VMMServer).Name -ArgumentList $VMMServer -ScriptBlock {
        Param ($VMMServer)

        # Cast to [version] so the comparison is numeric per component, not textual.
        $old = Get-WmiObject -Class Win32_Product | where {$_.Name -like "Microsoft System Center Virtual Machine Manager DHCP Server*" -and [version]$_.Version -lt [version]"3.2.7672.0"}
        $share = '\\' + $VMMServer + '\c$'
        $setup = $share + '\Program Files\Microsoft System Center 2012 R2\Virtual Machine Manager\SwExtn\DHCPExtn.msi'

        # Only touch the agent if the install share is reachable from this host.
        if (Test-Path -PathType Container $share -Verbose) {
            Write-Output "$env:ComputerName successfully connected to $share"
            if (@($old).Count -eq 1) {
                $version = $old.Version
                $name = $old.Name
                Write-Output "Found old version of $name $version. Uninstalling it."
                $old.Uninstall()
                Write-Output "Done Uninstalling"
                Write-Output "Installing newest version of $name from SCVMM Server"
                Start-Process $setup -ArgumentList "/qn" -Wait
            }
        } else {
            Write-Output "Couldn't connect to install share, aborting"
        }
    }
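The version check in the script above hinges on how the two version values are compared. WMI returns `Version` as a string, and comparing strings with `-lt` compares text rather than numbers. Here is a minimal sketch of the difference; `Test-IsOlderVersion` is a hypothetical helper name and the sample versions other than 3.2.7672.0 are made up for illustration.

```powershell
# Hypothetical helper (not part of VMM): is $Installed older than $Required?
function Test-IsOlderVersion {
    param(
        [Parameter(Mandatory = $true)] [string] $Installed,
        [Parameter(Mandatory = $true)] [string] $Required
    )
    # [version] compares major, minor, build and revision as numbers.
    return ([version]$Installed -lt [version]$Required)
}

# Made-up sample values, compared against the version named in this post.
Test-IsOlderVersion -Installed '3.2.7510.0' -Required '3.2.7672.0'   # True
Test-IsOlderVersion -Installed '3.10.0.0'   -Required '3.2.7672.0'   # False

# The same check on plain strings is a text comparison,
# where the "1" in "3.10" is weighed against the "2" in "3.2".
'3.10.0.0' -lt '3.2.7672.0'
```

For the versions involved here the two approaches happen to agree, but casting to `[version]` keeps the check correct if a later build number has a different number of digits.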

A word of warning: the above script should be considered a “proof of concept” that gives you a rough idea of how to do it. I’ve run it once, and it did work, so it will hopefully work for you too.

There is a minor problem with the above solution. That script will do something called a “double hop”. It’s when you run something on Computer A, which gets executed on Computer B which in turn tries to connect to Computer C and use the credentials provided in A. Two hops, aka double hop.

In the above script, it’s when it’s accessing the install files from a remote share.
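To see the double hop in isolation, a small probe like the following can help. It is only a sketch: `Test-SecondHop` is a hypothetical function name, and `HostB` and `ServerC` in the usage line are placeholder computer names, not anything from a real environment.

```powershell
# Hypothetical probe: run from computer A, hop to B, and let B try to reach a share on C.
function Test-SecondHop {
    param(
        [Parameter(Mandatory = $true)] [string] $HopComputer,   # computer B
        [Parameter(Mandatory = $true)] [string] $SharePath      # UNC path on computer C
    )
    # First hop: A -> B through PowerShell remoting.
    Invoke-Command -ComputerName $HopComputer -ArgumentList $SharePath -ScriptBlock {
        param($SharePath)
        # Second hop: B -> C. Without delegation, B has no credentials to present to C.
        New-Object PSObject -Property @{
            Computer  = $env:COMPUTERNAME
            SharePath = $SharePath
            Reachable = (Test-Path -PathType Container $SharePath)
        }
    }
}
```

Calling `Test-SecondHop -HopComputer 'HostB' -SharePath '\\ServerC\c$'` with default authentication would typically report `Reachable` as False (or raise an access-denied error), even though the same path opens fine when you are logged on to HostB directly.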

To solve that problem you have to enable something you might have heard about, called Kerberos Constrained Delegation (KCD), on all Hyper-V hosts (or other servers you want to double hop via).

In most environments KCD is not enabled, so the above script would not work all the way. In fact, the uninstall would work but the installation would not, so you would end up with a server that’s missing the DHCP Agent.

In case you ran the script without reading this part or before adding KCD, I added a small safeguard against that by doing a Test-Path before uninstalling the agent which probably told you it failed.

My good friend and colleague Mikael Nyström recently wrote a great blog post on how to use CredSSP instead of KCD for tasks like this.

And here is a slightly modified script using CredSSP instead of KCD.

    # Set default values
    # Specify your VMM Server Name
    $VMMServer = "VMMServerName"
    $Servers = (Get-SCVMHost -VMMServer $VMMServer).Name
    $InstallPath = '\\' + $VMMServer + '\c$'
    $FQDN = $env:USERDNSDOMAIN
    $Cred = Get-Credential -Message "Type the admin credentials used for connecting to the other servers"

    # Set config for the client (where you execute the script)
    Disable-WSManCredSSP -Role Client
    Enable-PSRemoting -Force
    Enable-WSManCredSSP -Role Client -DelegateComputer ("*." + $FQDN) -Force
    Get-WSManCredSSP

    # Configure the servers to accept CredSSP credentials
    foreach ($Server in $Servers) {
        Invoke-Command -ComputerName $Server -ScriptBlock {
            Enable-WSManCredSSP -Role Server -Force
            Get-WSManCredSSP
        }
    }

    # Upgrade old version of agent.
    foreach ($Server in $Servers) {
        $ServerFQDN = $Server
        Invoke-Command -ComputerName $ServerFQDN -Authentication Credssp -EnableNetworkAccess -Credential $Cred -ArgumentList $Server,$InstallPath -ScriptBlock {
            Param ($Server, $InstallPath)

            # Cast to [version] so the comparison is numeric per component, not textual.
            $old = Get-WmiObject -Class Win32_Product | where {$_.Name -like "Microsoft System Center Virtual Machine Manager DHCP Server*" -and [version]$_.Version -lt [version]"3.2.7672.0"}

            if (@($old).Count -eq 1) {
                # Read these before the check so both branches can report them.
                $version = $old.Version
                $name = $old.Name

                # Verify that we can access C$ on SCVMM Server for installing the agent.
                if (Test-Path -PathType Container $InstallPath -Verbose) {
                    Write-Output "$Server successfully connected to $InstallPath"
                    Write-Output "Found old version of $name $version. Uninstalling it."
                    $old.Uninstall()
                    Write-Output "Done Uninstalling"
                    Write-Output "Installing newest version of $name from SCVMM Server"
                    $setup = $InstallPath + '\Program Files\Microsoft System Center 2012 R2\Virtual Machine Manager\SwExtn\DHCPExtn.msi'
                    Start-Process $setup -ArgumentList "/qn" -Wait
                } else {
                    Write-Output "Unable to connect to Installation Location at $InstallPath"
                    Write-Output "Found old version of $name $version. But did not uninstall it."
                }
            }
        }
    }

A word of warning: the above script should be considered a “proof of concept” that gives you a rough idea of how to do it. I’ve run it once, and it didn’t break anything in that environment, so it might work for you too.

Basically, the script enables CredSSP on the computer you run it on and allows the credentials to be delegated to all remote servers that are part of your domain. It then connects to all Hyper-V hosts known by SCVMM and enables those as credential receivers.

Following that, it once again connects to those servers and checks whether the SCVMM DHCP Agent is outdated and whether it’s able to connect to the install location (the SCVMM server’s C$ share).

I made sure it verifies that it can connect to the install location before uninstalling the agent, because if it can’t connect to the SCVMM server, I would rather have an old DHCP Agent than no agent at all.
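That ordering can be sketched as a small decision function, separate from the remoting and WMI calls. `Get-AgentUpgradeAction` and its return strings are hypothetical names used only for illustration; the script itself gets the two inputs from the `Win32_Product` query and from `Test-Path`.

```powershell
# Hypothetical sketch of the decision the script makes on each host.
function Get-AgentUpgradeAction {
    param(
        [bool] $OldAgentFound,    # an outdated DHCP agent was found on the host
        [bool] $ShareReachable    # the host can reach the install share
    )
    if (-not $OldAgentFound)  { return 'NothingToDo' }
    # An old agent is better than no agent, so never uninstall without a reachable installer.
    if (-not $ShareReachable) { return 'KeepOldAgent' }
    return 'UninstallThenInstall'
}

Get-AgentUpgradeAction -OldAgentFound $true -ShareReachable $false   # KeepOldAgent
Get-AgentUpgradeAction -OldAgentFound $true -ShareReachable $true    # UninstallThenInstall
```

Keeping the reachability check ahead of the uninstall means a failed double hop leaves the host exactly as it was.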

And finally, it uninstalls the old agent and installs the new one.

Done!

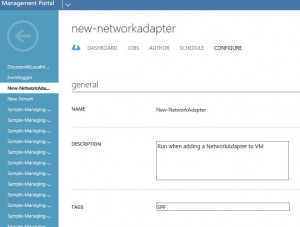

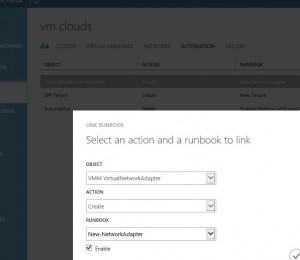

It’s also possible to use SCVMM’s Job function to schedule a job to be executed on all Hosts. But I’ll cover that in some future post.